ML Model Governance & Compliance – Auditing, Explainability & Fairness in ML

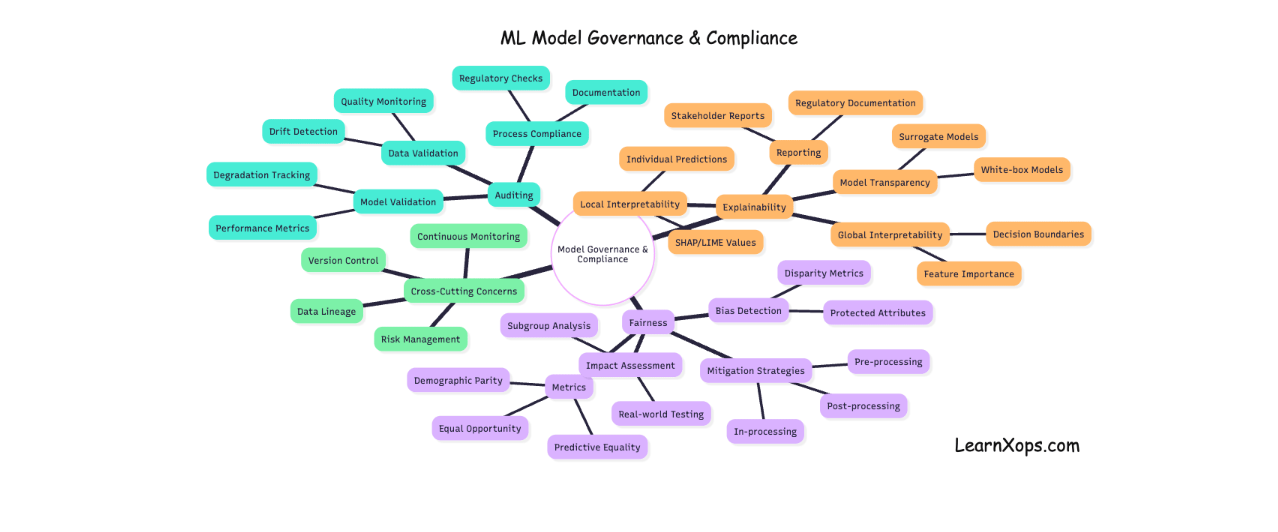

Model governance and compliance ensure accountability, traceability, and fairness in machine learning systems, especially when they handle sensitive or regulated data. Mastering auditing, explainability, and bias detection helps you build trustworthy AI solutions that align with legal and ethical standards.

📚 Key Learnings

- Understand the importance of governance in ML systems: accountability, traceability, compliance

- Learn how to audit model lineage and maintain version history

- Explore explainability tools (e.g., SHAP, LIME) to interpret black-box models

- Identify and address bias and fairness concerns in datasets and predictions

- Study compliance frameworks (GDPR, HIPAA, SOC 2) as they relate to ML

🧠 Learn here

What is ML Governance?

Machine Learning (ML) governance is a set of processes and tools used to manage, monitor, and control ML models and pipelines throughout their lifecycle. With growing reliance on ML systems in production, ensuring proper governance becomes critical for maintaining ethical standards, regulatory compliance, and trustworthiness.

Importance of ML Governance

1. Accountability

- Assigns responsibility for decisions made by ML systems

- Tracks who built, trained, approved, and deployed models

- Ensures human-in-the-loop practices where needed

2. Traceability

- Enables version control for data, code, models, and configurations

- Keeps detailed audit logs for training datasets, parameter settings, and model outputs

- Facilitates debugging, auditing, and model performance tracking over time

3. Compliance

- Ensures adherence to legal and industry standards such as GDPR, HIPAA, or financial regulations

- Supports bias and fairness audits to meet ethical AI principles

- Documents model intent, assumptions, and validation checks for regulatory reporting

Key Governance Components

- Model Registry: Tracks model versions, metadata, lineage, and lifecycle stages

- Experiment Tracking: Logs experiments, hyperparameters, results, and artifacts

- Data Lineage: Maps how data moves and transforms through ML pipelines

- Access Control: Manages permissions for model training, deployment, and usage

- Monitoring & Alerting: Ensures models behave as expected post-deployment

- Explainability Tools: Improves transparency through SHAP, LIME, or similar tools

Auditing Model Lineage and Maintaining Version History

What is Model Lineage?

Model lineage refers to the ability to track the origin, movement, and transformation of data and ML models throughout their lifecycle.

Key Components:

- Source Data: Where and how the training data was generated

- Feature Engineering: Applied transformations and pipelines

- Model Training: Algorithms, hyperparameters, runtime environment

- Evaluation: Metrics, test datasets, and results

- Deployment: Where and how the model is deployed

Why Audit Model Lineage?

- Ensure compliance with regulatory standards (e.g., GDPR, HIPAA)

- Improve debugging and retraining processes

- Enable transparency for business and technical stakeholders

- Support reproducibility for experiments and deployments

How to Maintain Version History

Use a Model Registry

Tools like MLflow, SageMaker Model Registry, Neptune.ai, and Weights & Biases allow you to:

- Track model artifacts and metadata

- Compare versions with metrics

- Associate models with datasets, code versions, and environments

Semantic Versioning

Use tagging like v1.0.0, v1.1.0, etc. to indicate major, minor, and patch changes to models.
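As a minimal sketch of the tagging scheme above, the helper below bumps a `vMAJOR.MINOR.PATCH` tag. The function name `bump_version` is hypothetical, and which model change counts as major, minor, or patch (for example, treating a retrain on fresh data as a minor bump) is a team convention rather than a standard.

```python
import re

# Matches tags such as v1.0.0 or v12.3.45
_TAG = re.compile(r"^v(\d+)\.(\d+)\.(\d+)$")

def bump_version(tag: str, part: str) -> str:
    """Return the next version tag; `part` is 'major', 'minor', or 'patch'."""
    match = _TAG.match(tag)
    if match is None:
        raise ValueError(f"not a vMAJOR.MINOR.PATCH tag: {tag!r}")
    major, minor, patch = (int(group) for group in match.groups())
    if part == "major":
        return f"v{major + 1}.0.0"
    if part == "minor":
        return f"v{major}.{minor + 1}.0"
    if part == "patch":
        return f"v{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown part: {part!r}")

print(bump_version("v1.0.0", "minor"))  # v1.1.0
print(bump_version("v1.1.0", "major"))  # v2.0.0
```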

Git for Code & Pipelines

- Track changes in training and preprocessing scripts

- Link Git commit hashes to specific model versions

Store Metadata and Artifacts

- Save training config, hyperparameters, metrics, model weights, etc.

- Use S3, GCS, or Azure Blob for persistent model storage
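The practices above (linking a Git commit, saving config and metrics, identifying the exact dataset) can be sketched as one JSON record per training run. This is an illustrative sketch using only the standard library: the function names, field names, and sample values are hypothetical, and a real setup would likely write the record to a model registry or object storage instead of printing it.

```python
import hashlib
import json
import os
import subprocess
import tempfile
from datetime import datetime, timezone

def sha256_of_file(path):
    """Hash a file in chunks so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()

def current_git_commit():
    """Return the current commit hash, or 'unknown' outside a Git repository."""
    try:
        result = subprocess.run(
            ["git", "rev-parse", "HEAD"],
            capture_output=True, text=True, check=True,
        )
        return result.stdout.strip()
    except (OSError, subprocess.CalledProcessError):
        return "unknown"

def build_lineage_record(model_version, dataset_path, hyperparameters, metrics, trained_by):
    """Collect who trained the model, when, how, and with what data."""
    return {
        "model_version": model_version,
        "trained_by": trained_by,
        "trained_at": datetime.now(timezone.utc).isoformat(),
        "git_commit": current_git_commit(),
        "dataset_sha256": sha256_of_file(dataset_path),
        "hyperparameters": hyperparameters,
        "metrics": metrics,
    }

# Demo with a throwaway dataset file; all values are placeholders.
with tempfile.TemporaryDirectory() as tmp:
    dataset_path = os.path.join(tmp, "train.csv")
    with open(dataset_path, "wb") as handle:
        handle.write(b"x,y\n1,0\n2,1\n")
    record = build_lineage_record(
        "v1.0.0", dataset_path, {"n_estimators": 100}, {"accuracy": 0.9}, "alice"
    )
    print(json.dumps(record, indent=2))
```

Hashing the dataset file gives a cheap way to detect that two model versions were trained on different data even when the file name is unchanged.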

Tools and Best Practices

| Tool | Purpose |
|---|---|
| MLflow | Model registry + tracking |
| DVC | Data version control |
| Git | Code and pipeline versioning |
| Kubeflow Pipelines | Workflow + lineage tracking |
| Neptune.ai | Metadata store & lineage UI |

✅ Auditing Checklist

- Are the training datasets versioned?

- Are the code and config files linked to each model version?

- Is the model registry in place and actively used?

- Are evaluation metrics logged and comparable?

- Is deployment history traceable to a model version?
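The checklist above can be turned into an automated check over a model's metadata record. The sketch below assumes a hypothetical record layout (keys such as `dataset_version`, `config_path`, and `deployments` are illustrative, not taken from any particular registry).

```python
# Each checklist question maps to a predicate over a metadata record (a dict).
CHECKS = {
    "training dataset is versioned": lambda r: bool(r.get("dataset_version")),
    "code and config are linked": lambda r: bool(r.get("git_commit")) and bool(r.get("config_path")),
    "model is registered": lambda r: bool(r.get("registry_name")),
    "evaluation metrics are logged": lambda r: bool(r.get("metrics")),
    # Passes trivially when the model has never been deployed.
    "deployments name a model version": lambda r: all(
        bool(d.get("model_version")) for d in r.get("deployments", [])
    ),
}

def audit_model_record(record):
    """Return a {check name: passed} mapping for one model record."""
    return {name: check(record) for name, check in CHECKS.items()}

# Hypothetical record with a missing dataset version.
record = {
    "model_version": "v1.2.0",
    "dataset_version": "",
    "git_commit": "abc1234",
    "config_path": "configs/train.yaml",
    "registry_name": "churn-model",
    "metrics": {"accuracy": 0.9},
    "deployments": [{"environment": "staging", "model_version": "v1.2.0"}],
}
for name, passed in audit_model_record(record).items():
    print("PASS" if passed else "FAIL", name)
```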

Explainability Tools for Interpreting Black-Box Models

In modern machine learning, many high-performing models (e.g., ensemble methods, deep neural networks) act as black boxes, making it difficult to understand how they make predictions. Explainability tools help interpret these models, increase transparency, and build trust with stakeholders.

Key Tools for Explainability

1. SHAP (SHapley Additive exPlanations)

- Based on game theory and Shapley values

- Provides global and local interpretability

- Model-agnostic and model-specific versions available

How it works:

It attributes a prediction to individual features by averaging each feature's marginal contribution over all possible subsets (coalitions) of the other features.
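To make the averaging concrete, here is a small sketch that computes exact Shapley values by enumerating every coalition. It is not how the `shap` library works internally (which uses faster, model-specific or sampling-based algorithms). The toy model, the instance `x`, and the choice to replace "absent" features with a fixed zero baseline are all assumptions for illustration.

```python
from itertools import combinations
from math import factorial

def shapley_values(value_fn, n_features):
    """Exact Shapley values for a set function over feature indices 0..n-1."""
    players = range(n_features)
    phi = [0.0] * n_features
    for i in players:
        others = [j for j in players if j != i]
        for size in range(len(others) + 1):
            # Weight of each coalition S of this size: |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n_features - size - 1) / factorial(n_features)
            for coalition in combinations(others, size):
                s = frozenset(coalition)
                # Marginal contribution of feature i when added to coalition S
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi

# Toy model with one interaction term (hypothetical).
def model(v):
    return 2 * v[0] + 3 * v[1] + v[0] * v[2]

x = [1.0, 2.0, 3.0]          # instance to explain
baseline = [0.0, 0.0, 0.0]   # stand-in values for "absent" features

def value_fn(coalition):
    mixed = [x[j] if j in coalition else baseline[j] for j in range(len(x))]
    return model(mixed)

phi = shapley_values(value_fn, len(x))
print(phi)  # approximately [3.5, 6.0, 1.5]
print(sum(phi), model(x) - model(baseline))  # attributions sum to the prediction gap
```

The interaction term `v[0] * v[2]` contributes 3 to the prediction and is split evenly between features 0 and 2. The enumeration grows exponentially with the number of features, which is why practical tools rely on approximations.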

Advantages:

- Theoretically sound and consistent

- Supports visualization (force plots, summary plots)

- Can quantify feature interactions (SHAP interaction values are available for tree models)

2. LIME (Local Interpretable Model-agnostic Explanations)

- Focuses on explaining individual predictions

- Builds an interpretable model locally around a prediction

- Perturbs input and observes changes in the output

How it works:

- Generates synthetic data points around the instance

- Fits a simple model to these points

- Interprets the original model by approximating it locally
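The three steps above can be sketched from scratch with NumPy (assumed to be installed). This is a simplified illustration, not the `lime` package's implementation: real LIME samples using training-data statistics, can discretize features, and fits a regularized model. The function name `local_surrogate`, the Gaussian perturbation, and the kernel settings are assumptions.

```python
import numpy as np

def local_surrogate(predict_fn, instance, n_samples=2000, scale=0.5, kernel_width=1.0, seed=0):
    """Fit a distance-weighted linear model around `instance`.

    Returns (coefficients, intercept) of the local linear approximation.
    """
    rng = np.random.default_rng(seed)
    instance = np.asarray(instance, dtype=float)
    # 1. Generate synthetic points around the instance.
    samples = instance + rng.normal(0.0, scale, size=(n_samples, instance.size))
    # 2. Query the black-box model on the perturbed points.
    targets = predict_fn(samples)
    # 3. Weight samples by proximity with an exponential kernel.
    distances = np.linalg.norm(samples - instance, axis=1)
    weights = np.exp(-(distances ** 2) / (kernel_width ** 2))
    # 4. Weighted least squares: scale rows by sqrt(weight), then solve.
    design = np.hstack([samples - instance, np.ones((n_samples, 1))])
    root_w = np.sqrt(weights)
    solution, *_ = np.linalg.lstsq(design * root_w[:, None], targets * root_w, rcond=None)
    return solution[:-1], solution[-1]

def black_box(points):
    # Hypothetical non-linear model standing in for a trained predictor.
    return points[:, 0] ** 2 + 3.0 * points[:, 1]

coefs, intercept = local_surrogate(black_box, [1.0, 0.0])
print("local feature weights:", coefs)
print("local intercept:", intercept)
```

Near the point `[1.0, 0.0]` the weights should land close to the local slopes of the black box (about 2 and 3). Changing `seed` or `kernel_width` shifts the result slightly, which illustrates the instability LIME is known for.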

Advantages:

- Simple to understand and implement

- Model-agnostic

- Provides interpretable weights for features

Getting Started

Installation

pip install shap lime

Example Code (Python)

import shap

import lime

import lime.lime_tabular

from sklearn.ensemble import RandomForestClassifier

from sklearn.datasets import load_iris

# Load data and train the model

data = load_iris()

X, y = data.data, data.target

model = RandomForestClassifier(random_state=0).fit(X, y)

# SHAP explainability (for multiclass models, the shape of shap_values depends on the SHAP version)

shap_explainer = shap.TreeExplainer(model)

shap_values = shap_explainer.shap_values(X)

shap.summary_plot(shap_values, X, feature_names=data.feature_names)

# LIME explainability: explain the prediction for the first sample

lime_explainer = lime.lime_tabular.LimeTabularExplainer(X, feature_names=data.feature_names, class_names=data.target_names)

exp = lime_explainer.explain_instance(X[0], model.predict_proba)

exp.show_in_notebook()

When to Use What?

| Tool | Best For | Type | Pros | Cons |
|---|---|---|---|---|
| SHAP | Global + Local | Model-agnostic + Specific | Theoretically sound, consistent | Computationally expensive |
| LIME | Local | Model-agnostic | Lightweight, intuitive | May be unstable, approximation based |

Identifying and Addressing Bias and Fairness in Machine Learning

Machine learning models can unintentionally perpetuate or even amplify biases present in data, leading to unfair predictions. Addressing these concerns is essential for building responsible, ethical, and trustworthy AI systems.

What is Bias in ML?

- Bias in Data: Systematic skew in training data due to historical prejudice, underrepresentation, or measurement error

- Bias in Model: When the model learns and amplifies unwanted patterns that reflect data biases

What is Fairness in ML?

Fairness refers to the absence of any prejudice or favoritism toward an individual or group based on their inherent or protected characteristics (e.g., race, gender, age).

Types of Fairness:

- Demographic Parity: Predictions should be independent of protected attributes

- Equal Opportunity: True positive rates should be equal across groups

- Equalized Odds: Both false positive and true positive rates should be equal across groups

- Counterfactual Fairness: A prediction should stay the same in a counterfactual scenario where the individual's protected attribute had been different

How to Identify Bias

1. Data Auditing

- Analyze data distribution by subgroups

- Visualize imbalances using histograms or box plots

- Check for missing data in protected attributes
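A data audit along these lines can start with a few counts per subgroup. The sketch below uses only the standard library and a tiny made-up dataset. The column names `gender` and `hired` and the function name `audit_by_group` are hypothetical.

```python
from collections import Counter, defaultdict

def audit_by_group(rows, group_key, label_key):
    """Summarize group sizes, positive-label rates, and missing group values."""
    counts = Counter()
    positives = defaultdict(int)
    missing = 0
    for row in rows:
        group = row.get(group_key)
        if group is None or group == "":
            missing += 1  # protected attribute not recorded for this row
            continue
        counts[group] += 1
        positives[group] += int(row[label_key] == 1)
    total = sum(counts.values())
    summary = {
        group: {
            "count": n,
            "share": n / total,
            "positive_rate": positives[group] / n,
        }
        for group, n in counts.items()
    }
    return summary, missing

# Toy rows for illustration only.
rows = [
    {"gender": "F", "hired": 1}, {"gender": "F", "hired": 0},
    {"gender": "M", "hired": 1}, {"gender": "M", "hired": 1},
    {"gender": "M", "hired": 0}, {"gender": "M", "hired": 1},
    {"gender": None, "hired": 0},
]
summary, missing = audit_by_group(rows, "gender", "hired")
for group, stats in summary.items():
    print(group, stats)
print("rows with missing group:", missing)
```

Large gaps in `share` point to underrepresentation, and gaps in `positive_rate` show label imbalance that a model may learn to reproduce.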

2. Model Auditing

- Evaluate model performance across demographic groups

- Use fairness metrics such as:

  - Statistical Parity Difference

  - Disparate Impact Ratio

  - Equal Opportunity Difference
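The three metrics named above reduce to comparisons of per-group rates. The following sketch computes them in plain Python for binary predictions, using the unprivileged-minus-privileged convention. Other toolkits may use a different sign or ratio direction, and the toy labels below are made up.

```python
def selection_rate(y_pred, groups, group):
    """Share of positive predictions within one group."""
    picked = [p for p, g in zip(y_pred, groups) if g == group]
    return sum(picked) / len(picked)

def true_positive_rate(y_true, y_pred, groups, group):
    """Share of actual positives in one group that were predicted positive."""
    hits = [p for t, p, g in zip(y_true, y_pred, groups) if g == group and t == 1]
    return sum(hits) / len(hits)

def fairness_report(y_true, y_pred, groups, unprivileged, privileged):
    sr_u = selection_rate(y_pred, groups, unprivileged)
    sr_p = selection_rate(y_pred, groups, privileged)
    tpr_u = true_positive_rate(y_true, y_pred, groups, unprivileged)
    tpr_p = true_positive_rate(y_true, y_pred, groups, privileged)
    return {
        # 0 means both groups are selected at the same rate
        "statistical_parity_difference": sr_u - sr_p,
        # 1 means parity; values far below 1 indicate the unprivileged group is selected less often
        "disparate_impact_ratio": sr_u / sr_p,
        # 0 means equal true positive rates
        "equal_opportunity_difference": tpr_u - tpr_p,
    }

groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 0, 1, 1, 1, 0]
report = fairness_report(y_true, y_pred, groups, unprivileged="a", privileged="b")
for name, value in report.items():
    print(f"{name}: {value:.3f}")
```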

3. Toolkits for Auditing

- IBM AI Fairness 360 (AIF360)

- Fairlearn (Microsoft)

- Google What-If Tool

- BiasAudit (R package)

Techniques to Mitigate Bias

1. Preprocessing Methods

- Reweighing

- Data balancing (oversampling/undersampling)

- Fair representation learning
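Of the preprocessing methods above, reweighing is the simplest to sketch: each sample gets the weight P(group) * P(label) / P(group, label), so that group and label look statistically independent in the weighted data. The function name and toy data below are hypothetical, and the sketch assumes every (group, label) combination that appears has at least one sample.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Per-sample weights that decorrelate group membership from the label."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    # weight = P(g) * P(y) / P(g, y) = n_g * n_y / (n * n_gy)
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 0, 0, 0, 1, 1, 1, 0]
weights = reweighing_weights(groups, labels)

def weighted_positive_rate(group):
    total = sum(w for w, g in zip(weights, groups) if g == group)
    positive = sum(w for w, g, y in zip(weights, groups, labels) if g == group and y == 1)
    return positive / total

print(weights)
print(weighted_positive_rate("a"), weighted_positive_rate("b"))
```

After reweighing, both groups have the same weighted positive rate. The weights can then be passed to estimators whose `fit` method accepts a `sample_weight` argument.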

2. In-processing Methods

- Modify training algorithms to include fairness constraints

- Use fairness-aware loss functions
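One way to picture a fairness-aware loss is logistic regression whose objective adds a penalty on the gap between the groups' average predicted scores. The NumPy sketch below is a hand-rolled illustration under that assumption, not a specific published algorithm or library API. The penalty form, the synthetic data, and names such as `fit_fair_logistic` and `fairness_weight` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def add_bias(X):
    return np.hstack([X, np.ones((X.shape[0], 1))])

def fit_fair_logistic(X, y, a, fairness_weight=0.0, lr=0.1, n_iter=2000):
    """Gradient descent on: mean log-loss + fairness_weight * (score gap)^2.

    The score gap is the difference in mean predicted probability
    between the groups a == 1 and a == 0.
    """
    Xb = add_bias(X)
    w = np.zeros(Xb.shape[1])
    g1, g0 = a == 1, a == 0
    for _ in range(n_iter):
        p = sigmoid(Xb @ w)
        grad_loss = Xb.T @ (p - y) / len(y)
        gap = p[g1].mean() - p[g0].mean()
        slope = p * (1 - p)  # derivative of the sigmoid with respect to its input
        grad_gap = (Xb[g1] * slope[g1][:, None]).mean(axis=0) - (Xb[g0] * slope[g0][:, None]).mean(axis=0)
        w -= lr * (grad_loss + fairness_weight * 2 * gap * grad_gap)
    return w

def score_gap(X, a, w):
    p = sigmoid(add_bias(X) @ w)
    return abs(p[a == 1].mean() - p[a == 0].mean())

# Synthetic data in which the first feature is a proxy for the protected attribute.
rng = np.random.default_rng(0)
n = 1000
a = rng.integers(0, 2, size=n)
X = np.column_stack([a + rng.normal(0, 0.5, n), rng.normal(0, 1, n)])
y = (X[:, 0] + 0.5 * X[:, 1] + rng.normal(0, 0.5, n) > 0.5).astype(float)

w_plain = fit_fair_logistic(X, y, a, fairness_weight=0.0)
w_fair = fit_fair_logistic(X, y, a, fairness_weight=10.0)
print("score gap without penalty:", score_gap(X, a, w_plain))
print("score gap with penalty:   ", score_gap(X, a, w_fair))
```

Raising `fairness_weight` trades predictive accuracy for a smaller gap, so both should be reported when comparing models.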

3. Post-processing Methods

- Adjust predictions to equalize performance across groups

- Reject option classification
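A simple form of post-processing is to pick a separate decision threshold per group so that each group is selected at roughly the same rate. The sketch below illustrates that idea only. It targets equal selection rates rather than equal error rates, it is not reject option classification, and the function names and scores are made up.

```python
def group_thresholds(scores, groups, target_rate):
    """Pick a per-group score threshold so each group selects about target_rate."""
    thresholds = {}
    for group in set(groups):
        ranked = sorted((s for s, g in zip(scores, groups) if g == group), reverse=True)
        k = max(1, round(target_rate * len(ranked)))  # how many to select in this group
        thresholds[group] = ranked[k - 1]
    return thresholds

def apply_thresholds(scores, groups, thresholds):
    """Predict 1 when a score reaches its own group's threshold."""
    return [int(s >= thresholds[g]) for s, g in zip(scores, groups)]

scores = [0.9, 0.6, 0.4, 0.2, 0.5, 0.3, 0.2, 0.1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

single = [int(s >= 0.5) for s in scores]  # one global threshold
thresholds = group_thresholds(scores, groups, target_rate=0.5)
adjusted = apply_thresholds(scores, groups, thresholds)

print("global threshold:", single)
print("per-group thresholds:", thresholds)
print("adjusted predictions:", adjusted)
```

With the global threshold, group `a` gets two positive predictions and group `b` only one. With per-group thresholds, both get two. Tied scores at a threshold can push a group slightly above the target rate.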

Example (Using Fairlearn in Python)

import pandas as pd

from fairlearn.datasets import fetch_adult

from fairlearn.metrics import MetricFrame, selection_rate, equalized_odds_difference

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

# Load the Adult census data; 'sex' is the sensitive attribute

X, y = fetch_adult(as_frame=True, return_X_y=True)

A = X['sex']

# One-hot encode categorical columns and binarize the label (1 = income above 50K)

X = pd.get_dummies(X)

y = (y == '>50K').astype(int)

X_train, X_test, y_train, y_test, A_train, A_test = train_test_split(X, y, A, test_size=0.3, random_state=0)

model = LogisticRegression(max_iter=1000).fit(X_train, y_train)

y_pred = model.predict(X_test)

# Compute accuracy and selection rate separately for each group

metrics = MetricFrame(metrics={"accuracy": accuracy_score, "selection_rate": selection_rate}, y_true=y_test, y_pred=y_pred, sensitive_features=A_test)

print("Metrics by group:")

print(metrics.by_group)

print("Equalized odds difference:", equalized_odds_difference(y_test, y_pred, sensitive_features=A_test))

Compliance Frameworks for Machine Learning: GDPR, HIPAA, SOC 2

Compliance frameworks provide legal and operational guidelines to ensure that machine learning (ML) systems manage data responsibly and protect user privacy.

1. General Data Protection Regulation (GDPR)

Applies to: Any organization that processes personal data of individuals in the EU, regardless of where the organization is based.

Key Requirements for ML:

- Data Minimization: Only collect and process necessary data

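- Lawful Basis and Consent: Processing needs a lawful basis; where that basis is consent, it must be freely given, specific, informed, and unambiguous

- Right to Explanation: For solely automated decisions with legal or similarly significant effects (Article 22), users can request meaningful information about the logic involved and ask for human review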

- Right to Erasure (Right to be Forgotten): Users can request deletion of their data

- Data Portability: Users have the right to download and transfer their data

ML Implications:

- Ensure model interpretability (via SHAP, LIME)

- Maintain data lineage and model versioning

- Enable opt-out from automated profiling
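Data lineage becomes practical for erasure requests when it can answer "which models were trained on this person's data?". The sketch below assumes two hypothetical lookup tables (dataset version to user IDs, and model version to dataset versions). How such mappings are stored, and whether an affected model must be retrained, depends on the system and on legal advice.

```python
def models_affected_by_erasure(user_id, dataset_members, model_datasets):
    """Return model versions trained on any dataset version containing `user_id`."""
    touched = {ds for ds, members in dataset_members.items() if user_id in members}
    return sorted(
        model for model, datasets in model_datasets.items() if touched & set(datasets)
    )

# Hypothetical lineage tables.
dataset_members = {
    "customers-2023-01": {"u1", "u2", "u3"},
    "customers-2023-06": {"u2", "u4"},
}
model_datasets = {
    "churn-v1.0.0": ["customers-2023-01"],
    "churn-v1.1.0": ["customers-2023-01", "customers-2023-06"],
    "upsell-v0.3.0": ["customers-2023-06"],
}

print(models_affected_by_erasure("u4", dataset_members, model_datasets))
# ['churn-v1.1.0', 'upsell-v0.3.0']
```

The returned versions are candidates for review or retraining once the user's rows have been deleted from the underlying datasets.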

2. Health Insurance Portability and Accountability Act (HIPAA)

Applies to: U.S. healthcare organizations and partners handling Protected Health Information (PHI).

Key Requirements for ML:

- Data Security: Ensure PHI is encrypted and securely stored

- Access Control: Role-based access to sensitive data

- Audit Logs: Maintain records of data access and changes

- De-identification: Remove or generalize identifiers (via the Safe Harbor or Expert Determination method) before using data for ML training
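As a rough illustration of de-identification before training, the sketch below drops direct identifiers and generalizes a few quasi-identifiers in the spirit of the Safe Harbor method (ages over 89 grouped together, ZIP codes truncated to three digits, dates reduced to the year). It covers only a handful of the identifier types that Safe Harbor lists, and all field names are hypothetical, so it should not be read as a complete or compliant implementation.

```python
# Hypothetical column names treated as direct identifiers.
DIRECT_IDENTIFIERS = {"name", "email", "phone", "ssn", "address"}

def deidentify(record):
    """Return a copy of `record` with identifiers removed or generalized."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    # Group all ages above 89 into a single category.
    if isinstance(cleaned.get("age"), int) and cleaned["age"] > 89:
        cleaned["age"] = "90+"
    # Keep only the first three ZIP digits.
    if cleaned.get("zip"):
        cleaned["zip"] = str(cleaned["zip"])[:3]
    # Keep only the year of the admission date (expects YYYY-MM-DD strings).
    if cleaned.get("admission_date"):
        cleaned["admission_year"] = str(cleaned.pop("admission_date"))[:4]
    return cleaned

# Made-up patient record.
patient = {
    "name": "Jane Doe",
    "ssn": "000-00-0000",
    "age": 93,
    "zip": "90210",
    "admission_date": "2021-03-14",
    "diagnosis_code": "E11",
}
print(deidentify(patient))
```

Note that Safe Harbor allows three-digit ZIP codes only for sufficiently populous areas, a condition this sketch does not check.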

ML Implications:

- Use synthetic data or anonymized datasets

- Ensure secure storage and encrypted communication

- Monitor model behavior with audit trails

3. System and Organization Controls 2 (SOC 2)

Applies to: SaaS providers and cloud service platforms handling customer data.

Key Requirements for ML:

- Security: Protect systems from unauthorized access

- Availability: Ensure reliable access to services

- Confidentiality: Protect confidential data in ML workflows

- Processing Integrity: Assure accurate data processing

- Privacy: Adhere to privacy practices in data collection and usage

🧠 In Short:

| Framework | Scope | ML Focus | Key Requirements |
|---|---|---|---|
| GDPR | EU data privacy | Data rights, transparency | Consent, erasure, explainability |
| HIPAA | US healthcare | PHI handling | De-identification, secure storage |
| SOC 2 | SaaS/cloud platforms | Secure ML systems | Access control, audit logging, privacy |

🔥 Challenges

Audit & Lineage

- Create a structured record for your model: who trained it, when, how, with what data

- Store lineage info (in Git, MLflow, or a YAML/JSON format)

- Add versioning logic and labels to models and datasets

Explainability

- Use SHAP or LIME on your classifier or regressor to visualize feature importance

- Generate explanation plots and logs for predictions

- Create an "Explain this prediction" endpoint in your API

Fairness & Compliance

- Create a synthetic biased dataset (e.g., salary prediction with gender imbalance)

- Use Fairlearn or custom Python code to analyze performance per group

- Document bias, mitigation strategies, and re-train the model with fairness constraints

- Draft a compliance checklist for your ML pipeline (logging, access, opt-out)