Feature Engineering & Feature Stores – Fueling ML with Quality Features

High‑quality, consistent features power great models. Feature stores enable reusability and consistency across training and serving.

Key Learnings

- What feature engineering is and why it’s crucial for ML success.

- Types of features and common transformations.

- Challenges in keeping features consistent between training and inference.

- What feature stores are and how they help.

- Popular feature stores overview: Feast, Tecton, SageMaker Feature Store.

Learn: What is Feature Engineering?

Feature engineering transforms raw data into meaningful input features that improve model performance and generalization.

Why It’s Crucial

- Garbage in, garbage out — quality features drive model accuracy.

- Integrates domain knowledge and reduces noise.

- Improves generalization and reduces overfitting.

- Enables consistent training and inference pipelines.

Practical Example

The script below engineers features from house_prices.csv: house age, size per room, a one-hot encoded location, and standardized numeric columns.

import pandas as pd

from sklearn.preprocessing import StandardScaler

# Load CSV

df = pd.read_csv('house_prices.csv')

# Create features

current_year = 2025

df['house_age'] = current_year - df['built_year']

# Treat zero bedrooms as missing so the division does not produce inf

df['size_per_room'] = df['size_sqft'] / df['bedrooms'].replace(0, float('nan'))

# One-hot encode 'location'

df = pd.get_dummies(df, columns=['location'], prefix='location')

# Standardize numerical features (zero mean, unit variance)

scaler = StandardScaler()

df[['size_sqft', 'house_age', 'size_per_room']] = scaler.fit_transform(

df[['size_sqft', 'house_age', 'size_per_room']]

)

# Drop unused columns

df = df.drop(['built_year'], axis=1)

# Save

df.to_csv('house_prices_engineered.csv', index=False)

print("🧠 Final Feature Engineered DataFrame:")

print(df.head())

Common Feature Types

- Numerical, Categorical, Ordinal, Binary/Boolean

- Datetime, Text/NLP

- Geospatial, Image/Audio, Sensor/IoT

Transforms

- Encoding, Scaling, Binning, Datetime extraction

- NLP tokenization, Log transforms, Interactions

- Imputation, Polynomial features, Quantiles
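A few of the transforms listed above can be sketched with pandas and NumPy. This is a minimal illustration, not a recipe: the toy data and the column names (`price`, `size_sqft`, `bedrooms`, `sold_at`) are made up for the example, as are the bin edges.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data; column names are assumptions for illustration.
df = pd.DataFrame({
    "price": [100_000, 250_000, 1_000_000, 400_000],
    "size_sqft": [800.0, 1500.0, None, 2000.0],
    "bedrooms": [2, 3, 5, 4],
    "sold_at": pd.to_datetime(
        ["2024-01-15", "2024-06-30", "2024-12-01", "2025-03-10"]
    ),
})

# Imputation: fill the missing size with the column median.
df["size_sqft"] = df["size_sqft"].fillna(df["size_sqft"].median())

# Log transform: compress the long right tail of prices.
df["log_price"] = np.log1p(df["price"])

# Datetime extraction: pull calendar parts out of a timestamp.
df["sold_month"] = df["sold_at"].dt.month
df["sold_dayofweek"] = df["sold_at"].dt.dayofweek  # Monday = 0

# Binning: bucket a numeric column into labeled ranges (edges are arbitrary).
df["size_bucket"] = pd.cut(
    df["size_sqft"],
    bins=[0, 1000, 1800, np.inf],
    labels=["small", "medium", "large"],
)

# Interaction: combine two columns into one feature.
df["sqft_per_bedroom"] = df["size_sqft"] / df["bedrooms"]

print(df[["size_sqft", "log_price", "sold_month", "size_bucket", "sqft_per_bedroom"]])
```

Each step adds a new column rather than overwriting the raw one (except the imputation), which keeps the original values available for debugging.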

Challenges in Consistency (Train vs Serve)

- Duplicating transformation code across training and serving stacks lets the two implementations drift apart.

- Data/feature drift over time degrades accuracy.

- Transformation mismatches at inference.

- Missing/late features, latency constraints, schema changes.

- Versioning and environment differences break reproducibility.
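One common way to reduce transformation mismatches is to bundle preprocessing and model into a single scikit-learn `Pipeline`, fit it once on training data, and ship the fitted artifact to serving. The sketch below assumes a small made-up dataset whose columns echo the house price example; `pickle` stands in for whatever artifact storage is actually used.

```python
import pickle

import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical training data; values are invented for illustration.
train = pd.DataFrame({
    "size_sqft": [800.0, 1500.0, 2000.0, 1200.0],
    "house_age": [30.0, 10.0, 5.0, np.nan],
    "location": ["north", "south", "north", "east"],
    "price": [150_000, 300_000, 420_000, 240_000],
})

numeric = ["size_sqft", "house_age"]
categorical = ["location"]

preprocess = ColumnTransformer([
    ("num", Pipeline([
        ("impute", SimpleImputer(strategy="median")),
        ("scale", StandardScaler()),
    ]), numeric),
    # handle_unknown="ignore" keeps serving from failing on unseen categories.
    ("cat", OneHotEncoder(handle_unknown="ignore"), categorical),
])

model = Pipeline([("preprocess", preprocess), ("regressor", LinearRegression())])
model.fit(train[numeric + categorical], train["price"])

# Serialize the whole pipeline so serving reuses the exact fitted transforms.
blob = pickle.dumps(model)

# At inference: load the artifact and pass raw, untransformed rows.
serving_model = pickle.loads(blob)
request = pd.DataFrame({
    "size_sqft": [1000.0],
    "house_age": [np.nan],   # missing value is filled by the fitted imputer
    "location": ["west"],    # unseen category is encoded as all zeros
})
prediction = serving_model.predict(request)
print(prediction)
```

Because the scaler statistics, imputation values, and category vocabulary live inside the artifact, the serving side never re-implements them. The pickled file is still tied to the library versions it was created with, so the versioning concern above remains.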

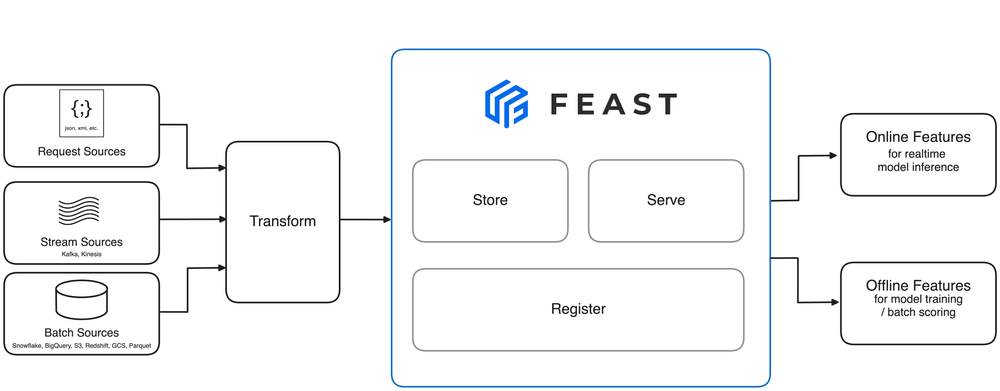

What is a Feature Store?

A feature store is a central repository for defining, managing, and serving features consistently for training and inference.

- Feature registry, ingestion pipelines

- Online store for low‑latency serving; offline store for training

- Transformation services, lineage, governance
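The offline/online split can be illustrated without any feature store library. The sketch below is a toy model in pandas, not how any particular product is implemented: the offline path does a point-in-time join (each training label only sees feature values known at or before its timestamp), and the online path keeps just the latest value per entity. The tables and column names are hypothetical.

```python
import pandas as pd

# Hypothetical feature log: one row per customer per update time.
feature_log = pd.DataFrame({
    "customer_id": [1, 1, 2, 2],
    "event_time": pd.to_datetime(
        ["2024-01-01", "2024-02-01", "2024-01-10", "2024-03-01"]
    ),
    "num_sessions": [5, 9, 2, 7],
})

# Training labels, each with the time at which the label was observed.
labels = pd.DataFrame({
    "customer_id": [1, 2],
    "label_time": pd.to_datetime(["2024-01-15", "2024-02-15"]),
    "churned": [0, 1],
})

# Offline: for each label, take the latest feature value at or before label_time.
training_set = pd.merge_asof(
    labels.sort_values("label_time"),
    feature_log.sort_values("event_time"),
    left_on="label_time",
    right_on="event_time",
    by="customer_id",
    direction="backward",
)

# Online: keep only the most recent value per entity for fast key lookups.
online_store = (
    feature_log.sort_values("event_time")
    .groupby("customer_id")
    .last()["num_sessions"]
    .to_dict()
)

print(training_set[["customer_id", "label_time", "num_sessions", "churned"]])
print(online_store)
```

Customer 1's label on 2024-01-15 is joined to the value from 2024-01-01 (5 sessions), not the later update, which is what prevents future information from leaking into training data.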

Feast (Open Source)

Open-source feature store supporting batch and real‑time, with online/offline stores and a pluggable backend.

pip install feast

feast init feast_project

cd feast_project

# Define a FileSource and FeatureView in a Python file inside the feature repository (example)

from datetime import timedelta

from feast import Entity, FeatureView, Field, FileSource

from feast.types import Int64, Float64

engagement_source = FileSource(

# Feast file sources expect Parquet, so convert the CSV first (e.g. with pandas)

path="customer_engagement.parquet",

timestamp_field="signup_date"

)

customer = Entity(name="customer_id", join_keys=["customer_id"])

engagement_fv = FeatureView(

name="engagement_fv",

entities=[customer],

ttl=timedelta(days=365),

schema=[

Field(name="last_login_days", dtype=Int64),

Field(name="num_sessions", dtype=Int64),

Field(name="avg_session_duration", dtype=Float64),

],

source=engagement_source

)
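Once the definitions above are registered with `feast apply`, features can be read back through the `FeatureStore` client. The following is a sketch under several assumptions: the repository lives at `repo_path`, the source data contains a `customer_id` column, and the customer id `1001` and the timestamp are placeholders. Exact behavior can differ between Feast versions, so treat it as a starting point and check it against the installed release.

```python
from datetime import datetime

import pandas as pd

# Feature references use the "<feature_view>:<feature>" form.
ENGAGEMENT_FEATURES = [
    "engagement_fv:last_login_days",
    "engagement_fv:num_sessions",
    "engagement_fv:avg_session_duration",
]


def fetch_engagement_features(repo_path: str = "."):
    """Sketch of offline and online retrieval; needs a repo where `feast apply` has run."""
    from feast import FeatureStore  # imported lazily so this file loads without Feast

    store = FeatureStore(repo_path=repo_path)

    # Offline: point-in-time correct training rows for the given entities and timestamps.
    entity_df = pd.DataFrame({
        "customer_id": [1001],                      # hypothetical id
        "event_timestamp": [datetime(2025, 1, 1)],  # placeholder timestamp
    })
    training_df = store.get_historical_features(
        entity_df=entity_df, features=ENGAGEMENT_FEATURES
    ).to_df()

    # Copy the latest feature values from the offline source into the online store.
    store.materialize_incremental(end_date=datetime.now())

    # Online: low-latency lookup of the latest values for one entity.
    online = store.get_online_features(
        features=ENGAGEMENT_FEATURES, entity_rows=[{"customer_id": 1001}]
    ).to_dict()
    return training_df, online
```

Rows whose `signup_date` is older than the view's `ttl` relative to `event_timestamp` are expected to come back empty from the historical query, so the placeholder timestamp should be chosen with the data in mind.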

Tecton (Managed)

Enterprise managed feature store with declarative pipelines, lineage, monitoring, and support for both streaming and batch features.

- Automated transformations, versioning, governance

- Integrates with Snowflake, Spark, Kafka

SageMaker Feature Store

Fully managed AWS feature store with deep integration into SageMaker, IAM, encryption, and online/offline sync.

- Use within AWS‑native ML workflows

- CloudWatch observability and Glue/Athena integration

Challenges

- Perform basic feature engineering on a CSV dataset using Pandas.

- Use scikit‑learn pipelines to automate transformations.

- Install Feast, init a repo, and define a FeatureView.

- Simulate online/offline serving with Feast + SQLite.

- Write “Intro to Feature Stores with Feast + Python” in your README/blog.

- Try Feast with BigQuery as the offline store or Redis as the online store.